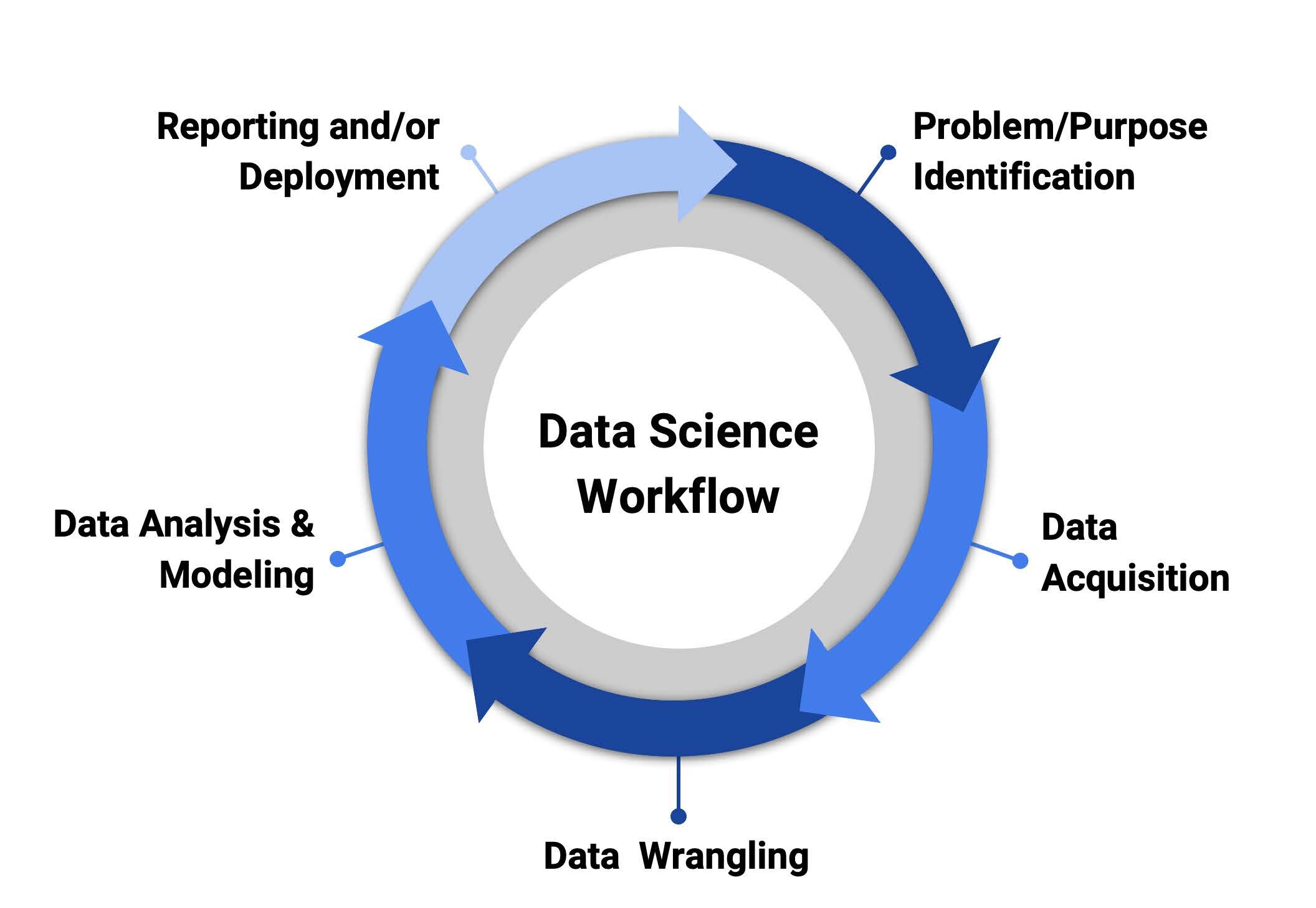

Data Science lets organizations extract useful insights from large datasets. The Data Science workflow is a systematic process made up of several stages, each of which moves raw data a step closer to actionable knowledge. This article walks through that workflow stage by stage.

Introduction to Data Science Workflow

Definition and Purpose

At its core, Data Science is the art and science of transforming raw data into meaningful information. The Data Science workflow serves as a structured approach to achieve this goal, guiding practitioners through a series of steps to extract, clean, analyze, and interpret data.

Importance in Decision-Making

Data Science is central to modern decision-making. Businesses use data-driven insights to gain a competitive edge, optimize operations, and make informed strategic decisions.

The Stages of the Data Science Workflow

Data Collection and Ingestion

The journey begins with the collection of raw data from diverse sources. Common methods of data acquisition include web scraping, APIs, and database queries. Ingestion pipelines, such as ETL (Extract, Transform, Load), then move that data into a form and location suitable for further analysis.
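
As a rough illustration of the ETL idea, the sketch below parses a small CSV export, cleans it, and loads it into an in-memory SQLite table. The sample data, the `orders` table, and the column names are all made up for this example; a real pipeline would read from an actual file, API, or database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export, invented for this example.
RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,5.00,DE
3,,us
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, cast types, upper-case country codes."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip records with a missing amount
        cleaned.append(
            (int(row["order_id"]), float(amount), row["country"].strip().upper())
        )
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
rows_loaded = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(rows_loaded)
```

Keeping the three steps as separate functions makes each one easy to test and swap out, for example replacing `extract` with an API call while leaving the rest unchanged.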

Data Cleaning and Preprocessing

Clean, high-quality data is fundamental to meaningful analysis. Here, we unravel the processes of data cleaning and preprocessing, addressing issues such as missing values, outliers, and inconsistencies. Techniques like normalization and scaling are also discussed.
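
The three issues named above can be sketched with the standard library alone. This is a minimal example under simple assumptions: missing values are represented as `None`, outliers are clipped using the common 1.5 × IQR rule, and scaling is min-max to the range [0, 1]. The `ages` list is invented data, and other strategies (dropping rows, z-score scaling) may suit a given dataset better.

```python
import statistics

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def clip_outliers_iqr(values, k=1.5):
    """Clip values that fall outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

# Made-up column with one missing value and one implausible entry.
ages = [23, 25, None, 31, 29, 27, 240]
cleaned = min_max_scale(clip_outliers_iqr(impute_median(ages)))
print(cleaned)
```

The order matters here: imputing first means the quartiles are computed on a complete column, and clipping before scaling keeps a single extreme value from compressing everything else toward zero.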

Exploratory Data Analysis (EDA)

EDA is the phase where data scientists uncover patterns, trends, and relationships within the dataset. This section explores statistical methods, visualization tools, and hypothesis testing used to gain a deeper understanding of the data.
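
A first pass at EDA often starts with summary statistics and a look at how two variables move together. The sketch below computes both with the standard library; the `ad_spend` and `sales` figures are invented, and in practice a library such as pandas plus a plotting tool would usually do this work.

```python
import math
import statistics

def summarize(values):
    """Return basic descriptive statistics for a numeric column."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Invented example columns.
ad_spend = [10, 20, 30, 40, 50]
sales = [12, 25, 31, 48, 55]

print(summarize(sales))
print(round(pearson_r(ad_spend, sales), 3))
```

A correlation close to 1 or -1 suggests a strong linear relationship, but it says nothing about causation, which is why EDA findings are normally followed up with visual checks and hypothesis tests.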

Feature Engineering

Feature engineering involves selecting, transforming, or creating new features to enhance the predictive power of machine learning models. This segment discusses the art of crafting features that contribute meaningfully to the modeling process.
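
Two common feature-engineering moves are deriving new columns from a raw field and encoding a categorical value as numeric flags. The sketch below does both for a single hypothetical transaction record; the field names (`amount`, `date`, `channel`) and the category list are assumptions made for the example.

```python
from datetime import date

def date_features(d):
    """Derive calendar features from a date."""
    return {
        "day_of_week": d.weekday(),          # Monday = 0, Sunday = 6
        "month": d.month,
        "is_weekend": int(d.weekday() >= 5),
    }

def one_hot(value, categories):
    """Encode a categorical value as one 0/1 flag per known category."""
    return {f"is_{c}": int(value == c) for c in categories}

def build_features(record, categories):
    """Combine the raw numeric field with the derived and encoded features."""
    features = {"amount": record["amount"]}
    features.update(date_features(record["date"]))
    features.update(one_hot(record["channel"], categories))
    return features

# Hypothetical record and category list.
record = {"amount": 42.0, "date": date(2024, 1, 6), "channel": "web"}
print(build_features(record, ["web", "store"]))
```

Passing the category list explicitly keeps the output columns stable: a value unseen at training time simply produces all zeros instead of a new column.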

Model Development

Building predictive models is at the heart of Data Science. We delve into various algorithms, from traditional statistical models to machine learning and deep learning approaches. Model selection, training, and validation are explored in detail.
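
The train-then-validate loop can be shown with about the simplest model available: a straight line fitted by ordinary least squares. This is only a sketch on synthetic data generated from `y = 2x + 1`; the helper names are illustrative, and real projects would normally reach for a library such as scikit-learn.

```python
import random

def train_val_split(pairs, val_fraction=0.25, seed=0):
    """Shuffle (x, y) pairs and split them into training and validation sets."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - val_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_line(pairs):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mse(model, pairs):
    """Mean squared error of the fitted line on a set of (x, y) pairs."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in pairs) / len(pairs)

# Synthetic, noise-free data so the expected fit is known in advance.
data = [(x, 2 * x + 1) for x in range(20)]
train, val = train_val_split(data)
model = fit_line(train)
val_error = mse(model, val)
print(model, val_error)
```

The important habit is that `val` is never seen by `fit_line`, so `val_error` estimates how the model behaves on new data instead of how well it memorized the training set.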

Model Evaluation and Fine-Tuning

Once a model has been developed, its performance needs to be evaluated and its hyperparameters tuned for better results. This section covers metrics for assessing model performance and techniques like cross-validation.
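
To make cross-validation and evaluation metrics concrete, here is a small sketch of k-fold accuracy plus precision and recall for a binary classifier. The threshold classifier and the toy data are stand-ins invented for the example; the folds are contiguous and unshuffled for simplicity, whereas real data would usually be shuffled or stratified first.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) for k contiguous folds over range(n)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, test_idx
        start += size

def precision_recall(y_true, y_pred):
    """Precision and recall for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def cross_validate(xs, ys, fit, predict, k=5):
    """Average held-out accuracy over k folds."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        correct = sum(predict(model, xs[i]) == ys[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)

# Toy classifier: threshold halfway between the two class means.
def fit_threshold(xs, ys):
    zeros = [x for x, y in zip(xs, ys) if y == 0]
    ones = [x for x, y in zip(xs, ys) if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2

def predict_threshold(threshold, x):
    return int(x > threshold)

xs = [1, 9, 2, 8, 3, 7, 1.5, 8.5, 2.5, 7.5]
ys = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
cv_accuracy = cross_validate(xs, ys, fit_threshold, predict_threshold, k=5)
print(cv_accuracy)
```

Accuracy alone can mislead on imbalanced data, which is why `precision_recall` is included: it separates false alarms from missed positives.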

Deployment and Integration

Once a model is deemed effective, deploying it into a real-world environment is the next step. This involves integrating the model into existing systems and monitoring its performance over time.
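
Monitoring over time often includes checking whether live inputs still resemble the training data. One simple heuristic, sketched below, measures how many standard errors the mean of a recent window sits from the training mean. The threshold of 3 and the sample values are assumptions chosen for illustration; production systems typically use richer drift tests and track model outputs as well.

```python
import math
import statistics

def mean_shift_score(baseline, live):
    """Standard errors separating the live-window mean from the baseline mean."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    live_mean = statistics.mean(live)
    if sigma == 0:
        # Constant baseline: any difference at all counts as an infinite shift.
        return 0.0 if live_mean == mu else math.inf
    standard_error = sigma / math.sqrt(len(live))
    return abs(live_mean - mu) / standard_error

def has_drifted(baseline, live, threshold=3.0):
    """Flag drift when the shift score exceeds the chosen threshold."""
    return mean_shift_score(baseline, live) > threshold

# Invented feature values: training baseline and two recent windows.
training_values = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
stable_window = [10, 9, 11, 10]
shifted_window = [20, 21, 19, 20]

print(has_drifted(training_values, stable_window))
print(has_drifted(training_values, shifted_window))
```

A check like this can run on a schedule against recent requests and raise an alert that prompts retraining or investigation.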

Communication of Results

Communicating findings effectively is key to the success of Data Science projects. Techniques for visualizing and presenting results in a clear and comprehensible manner are discussed.

Challenges and Ethical Considerations in Data Science

Ethical Concerns

Data Science is not without its ethical challenges. Issues related to privacy, bias, and the responsible use of data are explored, emphasizing the importance of ethical considerations throughout the workflow.

Handling Big Data

The era of Big Data presents unique challenges in terms of volume, velocity, and variety. This section discusses strategies for handling large datasets efficiently.
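
One basic strategy for volume is to process data in chunks so that the full dataset never has to sit in memory at once. The sketch below computes a mean over any iterable this way; the chunk size is an arbitrary choice, and distributed frameworks apply the same idea across many machines.

```python
from itertools import islice

def chunks(iterable, size):
    """Yield successive lists of at most `size` items from any iterable."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def streaming_mean(values, chunk_size=1000):
    """Compute the mean while holding only one chunk in memory at a time."""
    count, total = 0, 0.0
    for chunk in chunks(values, chunk_size):
        count += len(chunk)
        total += sum(chunk)
    return total / count if count else None

# range() stands in for a large source such as a file or database cursor.
print(streaming_mean(range(1, 101), chunk_size=7))
```

Because `values` can be a generator, the same function works on a file read line by line or a database cursor, not only on in-memory lists.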

The Future of Data Science Workflow

Emerging Technologies

As technology evolves, so does the Data Science landscape. This section explores emerging technologies such as artificial intelligence, automated machine learning, and the integration of domain knowledge into the workflow.

Continuous Learning and Adaptation

The journey through the Data Science workflow is a continuous learning process. This final section emphasizes the importance of staying updated with industry trends, acquiring new skills, and adapting to the ever-evolving landscape of data-driven insights.

Conclusion

The Data Science workflow is a multifaceted journey encompassing data collection, analysis, modeling, and communication of results. By understanding the nuances of each stage, practitioners can navigate the complexities of data-driven decision-making and get more value from their data. Looking ahead, emerging technologies and ethical considerations will both shape how Data Science workflows evolve.
